UAV Navigation Using Reinforcement Learning

What:

This was the course project for my graduate course, Decision Making Under Uncertainty. My team and I aimed to develop an effective navigation system for UAVs using reinforcement learning. The project gave us an opportunity to demonstrate our understanding of the algorithms covered in the class.

Why:

Autonomous systems have great potential to transform a wide range of industries, from agriculture to defense. To support the growth of these systems, sophisticated path-planning algorithms must be developed that produce efficient and safe trajectories, especially in uncertain environments such as cities, where there can be hundreds of moving obstacles.

Many path-planning algorithms used today are bio-inspired or sampling-based. Learning algorithms, however, can adapt to different environments, which makes them a promising option for future autonomous systems.

How:

We created an autonomous navigation system using Clipped Proximal Policy Optimization (PPO) as the base algorithm. We compared its performance against a heuristic-based algorithm (a bug algorithm), and we also tested it in unseen environments to see whether it could generalize beyond the environments it was trained on.
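The "clipped" in Clipped PPO refers to its surrogate objective, which limits how far a single update can move the policy. Below is a minimal Python sketch of that objective (for illustration only; the project itself was written in Julia), assuming the per-sample probability ratios r_t = π_θ(a_t|s_t) / π_θ_old(a_t|s_t) and advantage estimates A_t have already been computed. The function name and the default ε = 0.2 are illustrative assumptions, not values from our code.

```python
def clipped_surrogate(ratios, advantages, epsilon=0.2):
    """Mean PPO clipped surrogate objective over a batch (to be maximized).

    ratios:     pi_theta(a_t|s_t) / pi_theta_old(a_t|s_t) for each sample
    advantages: advantage estimate A_t for each sample
    epsilon:    clip range; 0.2 is an illustrative default
    """
    if not ratios or len(ratios) != len(advantages):
        raise ValueError("ratios and advantages must be non-empty and equal length")
    total = 0.0
    for r, a in zip(ratios, advantages):
        # Clip the ratio into [1 - eps, 1 + eps]
        clipped_r = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        # The elementwise minimum is a pessimistic bound: the objective gains
        # nothing from pushing the ratio beyond the clip range.
        total += min(r * a, clipped_r * a)
    return total / len(ratios)
```

In practice the optimizer minimizes the negative of this value with gradient descent. Taking the minimum of the clipped and unclipped terms is what keeps each update close to the policy that collected the data.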

Our algorithm successfully navigated both its training and testing environments. It also outperformed the bug algorithm, reaching the goal in fewer time steps.

Simulation Environment

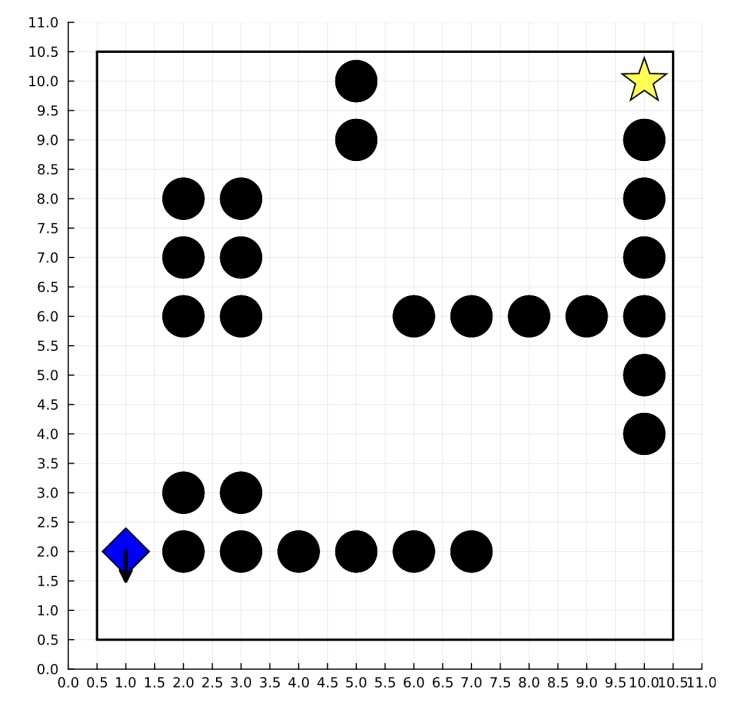

There were two different simulation environments for this project. The first was a simple grid-world environment, which was easily solved using value iteration.
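Value iteration repeatedly applies the Bellman optimality backup V(s) ← max_a [R(s, a) + γ V(s')] until the values stop changing, then reads off a greedy policy. The Python sketch below is an in-place variant on a small grid world; the grid layout, the -1 reward per step, the discount γ = 0.95, and the deterministic four-connected moves are all hypothetical assumptions, since the post doesn't describe the actual grid-world model.

```python
def value_iteration(grid, goal, gamma=0.95, step_reward=-1.0, tol=1e-9):
    """In-place value iteration on a hypothetical deterministic grid world.

    grid: list of equal-length strings, '#' = obstacle, '.' = free cell
    goal: (row, col) of the absorbing goal cell (its value stays 0)
    """
    rows, cols = len(grid), len(grid[0])
    moves = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right
    free = [(r, c) for r in range(rows) for c in range(cols) if grid[r][c] != "#"]
    V = {s: 0.0 for s in free}

    def next_state(s, m):
        r, c = s[0] + m[0], s[1] + m[1]
        if 0 <= r < rows and 0 <= c < cols and grid[r][c] != "#":
            return (r, c)
        return s  # moving into a wall or off the grid leaves the agent in place

    while True:
        delta = 0.0
        for s in free:
            if s == goal:
                continue
            # Bellman optimality backup: best step reward plus discounted value
            best = max(step_reward + gamma * V[next_state(s, m)] for m in moves)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break

    # Greedy policy: move toward the neighbor with the highest value
    policy = {s: max(moves, key=lambda m: V[next_state(s, m)])
              for s in free if s != goal}
    return V, policy


V, policy = value_iteration(["...", ".#.", "..."], goal=(0, 2))
```

Because every step costs the same, the greedy policy simply follows the shortest obstacle-free path to the goal.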

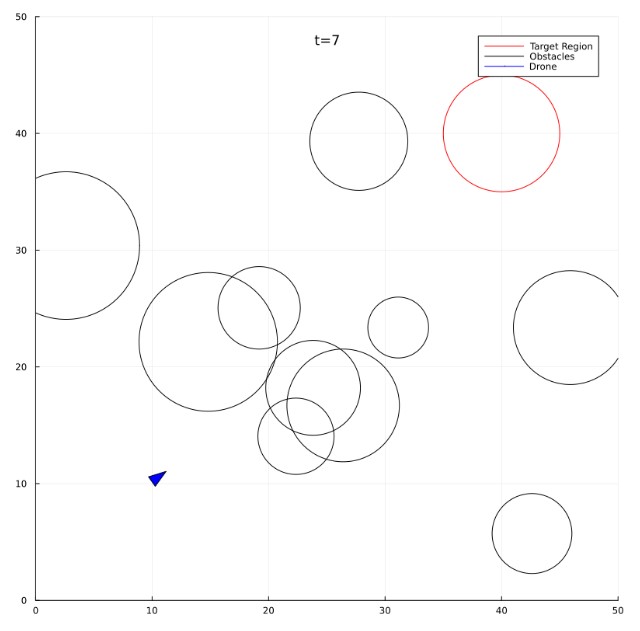

The second, more complex environment featured continuous state and action spaces, as well as stochastic dynamics propagated using Euler integration. We designed it to more closely resemble a real-world application.
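To show what propagating stochastic dynamics with Euler integration looks like, here is a Python sketch assuming a 2-D point-mass drone whose continuous action is a commanded acceleration, with zero-mean Gaussian noise added to that command. The state layout, time step, and noise level are hypothetical stand-ins rather than the project's actual model. Each call applies one forward-Euler step, s_{k+1} = s_k + Δt · f(s_k, a_k + w_k).

```python
import random

def euler_step(state, action, dt=0.1, noise_std=0.05, rng=random):
    """Propagate a hypothetical 2-D point-mass drone one step with forward Euler.

    state:  (x, y, vx, vy)  position and velocity
    action: (ax, ay)        commanded acceleration (continuous action)
    rng:    anything with a .gauss(mu, sigma) method, e.g. random.Random(seed)
    """
    x, y, vx, vy = state
    # Process noise makes the dynamics stochastic: the applied acceleration
    # is the commanded one plus zero-mean Gaussian noise.
    ax = action[0] + rng.gauss(0.0, noise_std)
    ay = action[1] + rng.gauss(0.0, noise_std)
    # Forward Euler: every derivative is evaluated at the current state.
    return (x + dt * vx, y + dt * vy, vx + dt * ax, vy + dt * ay)


# Example rollout with a seeded generator so the trajectory is reproducible
rng = random.Random(0)
state = (0.0, 0.0, 0.0, 0.0)
for _ in range(10):
    state = euler_step(state, (1.0, 0.5), rng=rng)
```

Forward Euler is the simplest integrator and is usually adequate for small time steps; a smaller `dt` reduces integration error at the cost of more steps per episode.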

The complex environment also featured randomly generated obstacles, although the location of the goal region was fixed across generations.
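One way to generate random obstacle layouts around a fixed goal is rejection sampling: draw obstacles uniformly and discard any that would overlap the goal region or the start. The Python sketch below assumes circular obstacles in a 10 × 10 world; the world size, goal and start coordinates, radii, and function name are all hypothetical, since the post doesn't describe how our obstacles were actually generated.

```python
import math
import random

# Hypothetical layout: a square world with a fixed goal and start.
WORLD_SIZE = 10.0
GOAL = (9.0, 9.0)        # goal center, identical in every generated map
GOAL_RADIUS = 0.5
START = (1.0, 1.0)
START_CLEARANCE = 0.5

def generate_obstacles(n, radius_range=(0.3, 1.0), rng=random, max_tries=1000):
    """Sample up to n circular obstacles (cx, cy, r) that stay inside the world
    and do not overlap the fixed goal region or the start position."""
    obstacles = []
    for _ in range(max_tries):
        if len(obstacles) == n:
            break
        r = rng.uniform(*radius_range)
        cx = rng.uniform(r, WORLD_SIZE - r)
        cy = rng.uniform(r, WORLD_SIZE - r)
        if math.dist((cx, cy), GOAL) < r + GOAL_RADIUS:
            continue  # would intrude on the goal region
        if math.dist((cx, cy), START) < r + START_CLEARANCE:
            continue  # would overlap the start position
        obstacles.append((cx, cy, r))
    return obstacles
```

Passing a seeded `random.Random` makes each map reproducible, which helps when comparing algorithms on the same set of layouts. `math.dist` requires Python 3.8 or later.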

Navigation Performance

One of the biggest challenges of this project was implementing PPO from scratch in Julia. Although there are many well-implemented libraries we could have used, we felt that writing a custom implementation would deepen our understanding of these algorithms.
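A from-scratch PPO implementation for continuous actions needs the log-probability of an action under the policy, which in turn gives the probability ratio used in the clipped objective. A common choice, assumed here because the post doesn't state our policy parameterization, is a diagonal Gaussian whose mean and log standard deviation come from the policy network. This Python sketch shows only that piece; the function names are hypothetical.

```python
import math

LOG_SQRT_2PI = 0.5 * math.log(2.0 * math.pi)

def gaussian_log_prob(action, mean, log_std):
    """log pi(a|s) for a diagonal Gaussian policy with per-dimension
    mean and log standard deviation (assumed outputs of the policy network)."""
    total = 0.0
    for a, mu, ls in zip(action, mean, log_std):
        z = (a - mu) / math.exp(ls)
        # log N(a; mu, sigma^2) = -z^2 / 2 - log(sigma) - log(sqrt(2*pi))
        total += -0.5 * z * z - ls - LOG_SQRT_2PI
    return total

def probability_ratio(action, mean, log_std, old_log_prob):
    """pi_theta(a|s) / pi_theta_old(a|s), computed in log space for numerical
    stability; old_log_prob is recorded when the action is first sampled."""
    return math.exp(gaussian_log_prob(action, mean, log_std) - old_log_prob)
```

Storing the old log-probability at rollout time, rather than keeping a copy of the old network, is a common way to compute the ratio during the update epochs.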

The drone only has access to the location of the goal, its own position, and limited short-range sensing of its immediate surroundings. With just this information, the drone generalized to a completely new testing environment and successfully reached the goal.
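As an illustration of such a limited observation, the Python sketch below concatenates the drone's position, the goal location, and a ring of range-limited distance readings modeled as ray casts against circular obstacles. The number of rays, the sensing range, the circular obstacle shape, and the function names are hypothetical assumptions, since the post doesn't specify the drone's sensor model.

```python
import math

def ray_distance(origin, angle, obstacles, max_range):
    """Distance along one ray to the nearest circular obstacle (cx, cy, r),
    capped at max_range when nothing is within sensing range."""
    dx, dy = math.cos(angle), math.sin(angle)
    best = max_range
    for cx, cy, r in obstacles:
        ox, oy = origin[0] - cx, origin[1] - cy
        c = ox * ox + oy * oy - r * r
        if c <= 0.0:
            return 0.0  # the drone is inside (or touching) an obstacle
        # Solve |origin + t*d - center|^2 = r^2 for the nearest t >= 0
        b = dx * ox + dy * oy
        disc = b * b - c
        if disc < 0.0:
            continue  # the ray misses this obstacle
        t = -b - math.sqrt(disc)
        if 0.0 <= t < best:
            best = t
    return best

def observe(position, goal, obstacles, n_rays=8, max_range=2.0):
    """Observation = [x, y, goal_x, goal_y, d_0, ..., d_{n_rays-1}]."""
    readings = [ray_distance(position, 2.0 * math.pi * k / n_rays, obstacles, max_range)
                for k in range(n_rays)]
    return [position[0], position[1], goal[0], goal[1]] + readings
```

Capping every reading at `max_range` means obstacles farther away are invisible to the policy, so it has to act on local information only, which is part of what makes generalizing to new layouts possible.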